With a 2008R2 -> 2012 upgrade and a massive storage migration under my belt I was feeling pretty good about my competency. All the data was moved off the old drives but they were still online. To get these drives offline a few steps were needed.

I knew the SQL Service was still dependent on these drives and going under the cluster covers into disk management to turn them off would be a bad idea. I carefully removed them from each instance service in the cluster manager. This removes the dependencies and plops the the cluster disks into unused storage. Then I got into cluster storage and deleted these old drives.

When drives are added, you have to add a dependency in the SQL Service to it. Otherwise, when failing over, drives will get yanked out from underneath SQL before it’s done gracefully shutting down. So in my infinite wisdom, I reworked all of the dependencies.

It went something like this:

bring the IP online

bring the name online

bring a mount point drive online

bring all the other drives online

bring SQL online

bring the agent and AS online

So two weeks go by and I have to do a failover now because the old SAN had to be removed from MPIO. This unfortunately requires a restart. So at 5am I logged in to click a couple buttons and hopefully go back to bed. No suck luck. The failover looked so graceful taking all the services offline in the correct order, then bringing everything online right up until it hit the SQL Server. It tried for about 1 second and failed.

If you haven’t noticed, cluster events are useless in scenarios like this. No detail, I assume this is mainly for reporting epic problems. I got into the event log, nothing there except the useless “SQL Failed” message in the system log. I went right to the SQL Logs but noticed the last time it was updated was went the agent went offline. No logs? That is bizarre.

I tried several other ways to start the SQL Service. It wouldn’t start on ANY node which didn’t make sense because the only change we made was to unmap a single node full of old SAN drives. After about an hour I decided to make my way into work and alert the authorities. I was assuming I would need some help from Microsoft at this point but I don’t have direct access to open tickets.

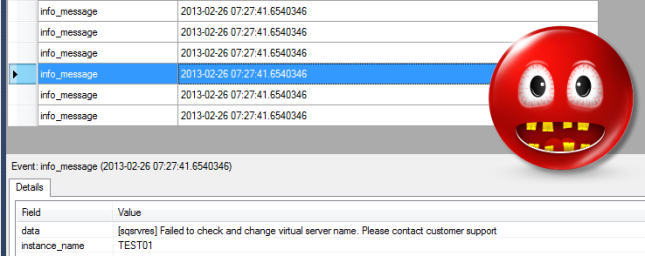

Fortunately, I double checked the logs. In SQL Configuration Manager I noticed there is a Dump location. I found some .xel files in this location and opened the newest up in SSMS or VS shell.

“Failed to check and change virtual server name. Please contact customer support.”

This may not sound too clear to you right off the bat, but it was clear to me. I remembered there being a dependency in the SQL Service to the virtual name before. But this time I had attempted to chain these dependencies. I figured that if SQL was dependent on the drive, and the drive dependent on the name, everything would come up in the right order. It appeared so, but apparently SQL really needs that dependency on the name. I added it and SQL came online shortly before 8am. Moral of the story, test any cluster dependency changes (duh).